Service development, provisioning, operation and integration into large-scale production infrastructures often suffer from a lack of quality assurance. This may be because the different actors involved are either not aware of the benefits of applying quality practices, or not keen to adhere to them, as they might increase the burden of software and service development, deployment and management.

Thus, a set of abstract Software Quality Assurance (SQA) criteria [1] has been developed and detailed. It describes the quality conventions and best practices that apply to the development phase of a software component, and it has been applied within several software development projects. The criteria are abstract with respect to the services and tools that can be chosen to verify them, while at the same time being pragmatic: they focus on automated verification and follow a DevOps approach.

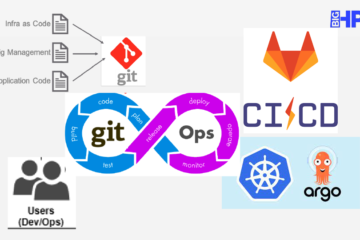

Figure 1: Combining software development with IT operations

The initial effort of creating and developing tests to verify such criteria pays off once they run automatically whenever code changes are applied, because many bugs are then caught early in the development process.

Verification and validation are represented by a pipeline as code, following DevOps practices realized as Continuous Integration (CI) and Continuous Delivery (CD). These pipelines consist of a chain of processing elements arranged in stages, which aim to establish whether a software system fulfils its required purpose and satisfies specifications and standards.
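
The idea of a chain of stages can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Stage` class, the `run_pipeline` function and the stage names are hypothetical and do not correspond to any particular CI/CD tool or to the project's actual pipelines. Each stage is a check that either passes or fails, and the chain stops at the first failure.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    """One processing element of the pipeline (hypothetical structure)."""
    name: str
    check: Callable[[], bool]  # returns True when the stage passes


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run the stages in order and stop at the first failure.

    Returns (overall_success, names_of_the_stages_that_were_executed).
    """
    executed = []
    for stage in stages:
        executed.append(stage.name)
        if not stage.check():
            return False, executed
    return True, executed


# Illustrative stage names; the lambdas stand in for real tools.
pipeline = [
    Stage("code-style", lambda: True),
    Stage("unit-tests", lambda: True),
    Stage("build-artifact", lambda: False),  # simulate a failing build
    Stage("integration-tests", lambda: True),
]

ok, executed = run_pipeline(pipeline)
print(ok, executed)
```

In this sketch the integration tests never run, because the simulated build fails first. Stopping early is what gives developers rapid feedback on the stage that broke.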

Examples of tests and tasks that can be automated as part of a CI/CD pipeline are:

- Unit testing: isolate each unit of the software to identify, analyze and fix the defects related to the developed code.

- Integration testing: verify the functional behaviour, performance and reliability of the interactions between the modules that are integrated.

- System testing: black-box testing technique performed to evaluate the system’s compliance against specified requirements in an end-to-end perspective.

- Acceptance testing: evaluate the system’s compliance with the business requirements and verify if it has met the required criteria for delivery to end-users.

- Automated build of artifacts, whether software packages such as RPMs and DEBs, or Docker images.
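
As a minimal sketch of the difference between the first two test levels, the example below uses Python's standard `unittest` module. The functions `parse_version` and `is_upgrade` are hypothetical, invented only for illustration: the unit test exercises one function in isolation, while the integration test checks that the two functions work correctly together.

```python
import unittest


def parse_version(tag: str) -> tuple:
    """Hypothetical unit under test: turn 'v1.2.3' into (1, 2, 3)."""
    return tuple(int(part) for part in tag.lstrip("v").split("."))


def is_upgrade(current: str, candidate: str) -> bool:
    """Hypothetical second module that depends on parse_version."""
    return parse_version(candidate) > parse_version(current)


class UnitTests(unittest.TestCase):
    def test_parse_version(self):
        # Only one function is exercised, in isolation.
        self.assertEqual(parse_version("v1.2.3"), (1, 2, 3))


class IntegrationTests(unittest.TestCase):
    def test_modules_together(self):
        # Both functions are exercised through their interaction.
        self.assertTrue(is_upgrade("v1.9.0", "v1.10.0"))
        self.assertFalse(is_upgrade("v2.0.0", "v1.10.0"))


def run_suite() -> unittest.TestResult:
    """Collect both test classes and run them, as a CI job would."""
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    suite.addTests(loader.loadTestsFromTestCase(UnitTests))
    suite.addTests(loader.loadTestsFromTestCase(IntegrationTests))
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_suite()
print("all tests passed:", result.wasSuccessful())
```

A CI job would typically turn the outcome of such a run into the pass or fail status of its pipeline stage.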

Verification and validation complement each other: the testing workflow starts with verification, which is followed by validation.

Figure 2: The workflow starts from verification followed by the validation

Integration testing of components and services is best achieved with a small infrastructure, or Pilot testbed, where all components and services can be deployed (preferably in an automated way) and tested together.

The BigHPC platform can be seen as a collection of interlinked modules (services) that work and interact with each other. Therefore, the Pilot testbed infrastructure has to satisfy the needs of the developers regarding testing and integration of all services and components of BigHPC, in order to validate the platform. The Pilot testbed serves both as a preview for end-users, who can execute real-world applications, and as a demonstrator for system administrators who wish to test the management capabilities of the platform.

Figure 3: High-level view of the Pilot testbed as well as the main actors of this infrastructure

Since the platform has a modular architecture, each component or service can be tested and validated together with the remaining services, without impacting the other components. The validation can be performed as soon as a new version of a given component becomes available. The modularization should allow any given component to be deployed independently, including an upgrade, or a downgrade or rollback in case of issues.

The components or services are further divided into stateless and stateful:

- The stateless components can be deployed and configured in any infrastructure, without any dependency on pre-existing data or metadata. Testing new versions of such components is simple: the component is upgraded and tested, and in case of errors or bugs it can be quickly downgraded or replaced by the previous working version. Category 2 (low-level components) are all stateless.

- In the case of stateful components, such as components that depend on a database or on pre-existing data and/or metadata (cf. the services for the container repositories), a backup copy should be made prior to the upgrade of the component. In this way, if an error or bug occurs, even when the upgrade implies a change to the database schema, the component can be downgraded and the backup copy restored, returning it to a healthy state.
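
The upgrade procedure for both kinds of component can be sketched as follows. This is a simplified, in-memory illustration under stated assumptions: the `Component` class, the `upgrade` function and the `add_owner_field` migration are hypothetical and stand in for real deployment tooling and a real database. The point is the order of operations: back up the state first, then upgrade, then restore both version and data if the upgrade does not end in a healthy state.

```python
import copy
from typing import Callable, Optional


class Component:
    """Hypothetical in-memory stand-in for a deployed service."""

    def __init__(self, name: str, version: str, data: Optional[dict] = None):
        self.name = name
        self.version = version
        self.data = data  # None means the component is stateless

    @property
    def stateful(self) -> bool:
        return self.data is not None


def upgrade(component: Component, new_version: str,
            migrate: Callable[[dict], None],
            health_check: Callable[[Component], bool]) -> bool:
    """Upgrade a component; roll back version and data on any failure."""
    previous_version = component.version
    # Stateful components get a backup copy before anything is changed.
    backup = copy.deepcopy(component.data) if component.stateful else None
    try:
        component.version = new_version
        if component.stateful:
            migrate(component.data)  # e.g. a schema change
        if not health_check(component):
            raise RuntimeError("health check failed")
        return True
    except Exception:
        # Downgrade and, for stateful components, restore the backup.
        component.version = previous_version
        if component.stateful:
            component.data = backup
        return False


def add_owner_field(data: dict) -> None:
    """Hypothetical schema migration that modifies the stored records."""
    for row in data["images"]:
        row["owner"] = "unknown"


repo = Component("container-repo", "1.0", {"images": [{"name": "app"}]})
# Simulate an upgrade that leaves the service unhealthy.
ok = upgrade(repo, "2.0", add_owner_field, lambda c: False)
print(ok, repo.version, repo.data)
```

In this run the migration has already changed the records when the health check fails, so restoring the backup is what brings the data back in line with the downgraded version. A stateless component skips the backup and restore steps entirely.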

Figure 4: Pilot testbeds depicting the two foreseen infrastructures as well as how the components and services will be deployed

In conclusion, with the automation and testing facility provided for deployment and service integration, we hope to bring better practices with less effort, fostering the adoption of the key DevOps principles: shared ownership, workflow automation and rapid feedback.

Mário David and Samuel Bernardo, LIP

December 16, 2021

[1] Orviz, Pablo; López García, Álvaro; Duma, Doina Cristina; Donvito, Giacinto; David, Mario; Gomes, Jorge, 2017. A set of common software quality assurance baseline criteria for research projects. CSIC-UC – Instituto de Física de Cantabria (IFCA). http://hdl.handle.net/10261/160086.