HPC centers are no longer targeted solely at highly parallel modeling and simulation tasks. The computational power offered by these systems is now also being used to support advanced Big Data analytics in fields such as healthcare, agriculture, environmental sciences, smart cities, and fraud detection [1,2]. By combining both computational paradigms, HPC infrastructures will be key for improving the lives of citizens, speeding up scientific breakthroughs in different fields (e.g., health, IoT, biology, chemistry, physics), and increasing the competitiveness of companies. This added value has motivated significant investment in HPC from governments and industry, given the high Return on Investment (ROI) expected from this technology. In 2015, the HPC market was worth 11.4 billion dollars, and it is forecast to reach 46.3 billion dollars by 2025.

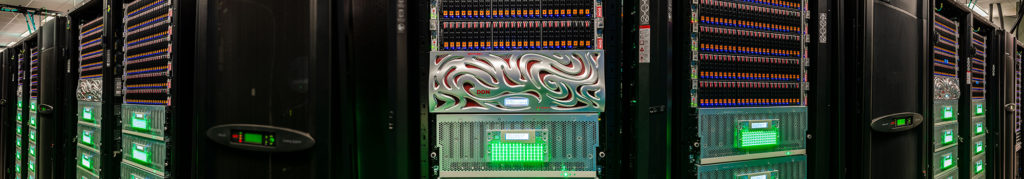

As the utility and usage of HPC infrastructures increase, more computational and storage power is required to efficiently handle data-driven applications. Many HPC centers are now aiming at exascale supercomputers that deliver at least one exaFLOPS (10^18 floating-point operations per second), which represents a thousandfold increase in processing power over the first petascale computer, deployed in 2008 [3,4]. Although this extra power is necessary for handling the growing number of HPC applications, several outstanding challenges must still be tackled before it can be fully leveraged.
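As a quick check of the thousandfold figure, assuming the usual definitions of one petaFLOPS as 10^15 and one exaFLOPS as 10^18 floating-point operations per second:

$$
\frac{1\ \text{exaFLOPS}}{1\ \text{petaFLOPS}} = \frac{10^{18}\ \text{FLOP/s}}{10^{15}\ \text{FLOP/s}} = 10^{3}
$$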

Management of heterogeneous infrastructures and workloads: Adding more compute and storage nodes also increases the complexity of the overall distributed HPC infrastructure, making it harder to monitor and manage. This complexity is compounded by the need to support highly heterogeneous applications, which translate into different workloads with specific data storage and processing needs [3]. On the one hand, traditional scientific modeling and simulation tasks require large slices of computational time, are CPU-bound, and rely on iterative approaches (parametric/stochastic modeling). On the other hand, data-driven analytical applications involve shorter computational tasks that are I/O-bound and, in some cases, have real-time response requirements (i.e., they are latency-oriented). Many of these applications also leverage AI and machine learning tools that require specific hardware (e.g., GPUs) in order to be efficient.

Support for general-purpose analytics: The increased heterogeneity also demands that HPC infrastructures be able to support general-purpose applications that were not designed explicitly to run on specialized HPC hardware, unlike most traditional modeling and simulation applications [5]. Without such support, developers must significantly change their analytical applications before they can be deployed in HPC clusters. Managing HPC clusters that support such a broad set of applications also becomes a complex problem, since these clusters must provide not only the required libraries and compilers but also multiple versions of the same software.

The previous two challenges clearly demand new approaches to infrastructure and data management. Namely, the virtualization of hardware resources and efficient monitoring of highly distributed infrastructures are key points that still need to be improved.

Avoiding the storage bottleneck: As a complementary challenge, increasing the computational power and improving the management of HPC infrastructures may still not be enough to fully harness their capabilities. Many applications are now data-driven and require efficient data storage and retrieval (e.g., low latency and/or high throughput) from HPC clusters. With an increasing number of applications and heterogeneous workloads, the storage systems supporting HPC may easily become a bottleneck [3,6]. As pointed out by several studies, storage access time is one of the major bottlenecks limiting the efficiency of current and next-generation HPC infrastructures. If this challenge is not solved, the effort of increasing computational power and building exascale supercomputers will be of limited value.

This is not a trivial challenge, as HPC infrastructures are vertically designed and composed of several layers along the I/O path that provide an assortment of compute, network, and storage functionalities (e.g., operating systems, middleware libraries, distributed storage, caches, device drivers). Each of these layers includes a predetermined set of functionalities, with strict interfaces and isolated procedures that it applies to I/O requests, which results in a complex, limited, and coarse-grained treatment of the I/O flow. This monolithic design is only efficient for specific applications and hardware.

The BigHPC project aims at addressing the previous three challenges: i) improving the management of heterogeneous HPC infrastructures and workloads; ii) enabling the support for general-purpose analytical applications; and iii) solving the current storage access bottleneck of HPC services. Addressing these challenges is crucial for taking full advantage of the next generation of HPC infrastructures.

Stay tuned for the next blog posts that will discuss each of these challenges in more detail, while delving into the vision and contributions of the BigHPC framework.

BigHPC Consortium

June 8, 2021

[1] Osseyran, A. and Giles, M. eds., 2015. Industrial applications of high-performance computing: best global practices (Vol. 25). CRC Press.

[2] Netto, M.A., Calheiros, R.N., Rodrigues, E.R., Cunha, R.L. and Buyya, R., 2018. HPC cloud for scientific and business applications: Taxonomy, vision, and research challenges. ACM Computing Surveys (CSUR), 51(1), p.8.

[3] Joseph, E., Conway, S., Sorensen, B. and Thorp, M., 2017. Trends in the Worldwide HPC Market (Hyperion Presentation). HPC User Forum at HLRS.

[4] Reed, D.A. and Dongarra, J., 2015. Exascale computing and big data. Communications of the ACM, 58(7), pp.56-68.

[5] Katal, A., Wazid, M. and Goudar, R.H., 2013, August. Big data: issues, challenges, tools and good practices. In 2013 Sixth international conference on contemporary computing (IC3) (pp. 404-409). IEEE.

[6] Yildiz, O., Dorier, M., Ibrahim, S., Ross, R. and Antoniu, G., 2016, May. On the root causes of cross-application I/O interference in HPC storage systems. In 2016 IEEE International Parallel and Distributed Processing Symposium (IPDPS) (pp. 750-759). IEEE.